AI is often treated with a lot of scepticism, particularly in the creative industry, where people are (rightfully) worried about the preservation of human creativity and the issues regarding originality and copyright associated with anything generated by an algorithm.

In reality, the advent of AI tools opens many doors for music producers across composition, arrangement, mixing, and mastering. While there will be cases of misuse, when applied appropriately, these tools have the potential to enhance your projects.

In my day job as a development researcher, I frequently delve into new AI technology and its potential to enhance creativity in media. For instance, I have used stem separators such as Voice.ai to extract sounds when soundtracking part of a sizzle reel. Previously, I would have had no way to lift individual sections or elements from a track and weave them into a new soundbed.

In this article, we will examine several freely available AI tools, their growing role in music production, and how you can apply them to your own process.

Many of the most successful musicians in the world are already incorporating AI into their practice. Ninja Tune affiliate Actress released a mini LP in collaboration with an AI learning programme called ‘Young Paint’, which aims to explore ‘complex simplicity and reimagine it as a sort of sonic paint with splats, sprays, splashes, dots in an Impressionistic fashion’.

Meanwhile, Holly Herndon’s 2019 album included sounds produced by an AI trained on voices and instrumental samples. A couple of years later, she trained a ‘computer musician’ called Holly+: a deepfake of her own voice. Herndon claims vocal deepfakes are ‘here to stay’ and that people should be encouraged to explore exciting new music-making tech while artists are protected. She described the project as an experiment in ‘communal voice ownership’.

Similarly, Grimes recently released software called Elf.tech, essentially an AI clone of her own voice, which anyone can use to create music, with profits split 50/50 between the creator and her. She also presented the move as ‘communal voice ownership’, arguing that her company ‘found a way to allow people to perform through my voice, reduce confusion by establishing official approval, and invite everyone to benefit from the proceeds generated from its use.’ Music made this way is then ‘released’ under the alias GrimesAI, credited alongside the co-creator. This profile has over 100,000 monthly listeners on Spotify! More recently, FKA Twigs has also announced that she is cloning her voice.

Finally, alongside his 26th studio album Reflection, ambient extraordinaire Brian Eno released a generative version of its single 54-minute piece as an app. This version plays endlessly and changes depending on the time of day:

"My original intention with Ambient music was to make endless music, music that would be there as long as you wanted it to be. I wanted also that this music would unfold differently all the time – ‘like sitting by a river’: it’s always the same river, but it’s always changing".

One thing all these artists have in common is that they are using AIs trained on their own copyrighted material. If you’re nerdy enough to be training your own AI, you are probably way ahead of the curve and clued up on the legislation around ownership. Put simply, if you are looking to use other people’s AIs to enhance your music production, any ‘original music’ they generate is, in many jurisdictions, currently considered public domain.

So while you could incorporate very short sections or use AI-generated content as the inspirational basis for a track, claiming to be the artist behind a piece that you haven’t composed yourself is fraudulent. This is most relevant when it comes to composition and melody generation.

Composition and melody generators are AI programmes that can create ‘original’ melodies and harmonies.

Udio is one of the most impressive text-to-audio generators I’ve seen. In just a few sentences, you can prompt it with the style and themes you want, and bosh! A minute or so later, it will produce an audio file that matches your description. It even generates lyrics, which often earn at least a wry smile, if not a hats off.

Full-track generators like this are best used purely as inspiration tools. For example, if you want to write a dream-pop track about sweetcorn, you could prompt the AI with exactly that; it will instantly conjure the kind of vibe you’re going for, which might spark your own writing process. Remember, you do not own anything the AI programme generates, even if you’re the one who prompted it.

For full disclosure, I haven’t used Beatoven.ai, but it’s another well-regarded AI music generator that looks quite versatile. It allows you to do text-to-music generation and customise the output with further prompts. You can also download the music and license it non-exclusively for commercial use.

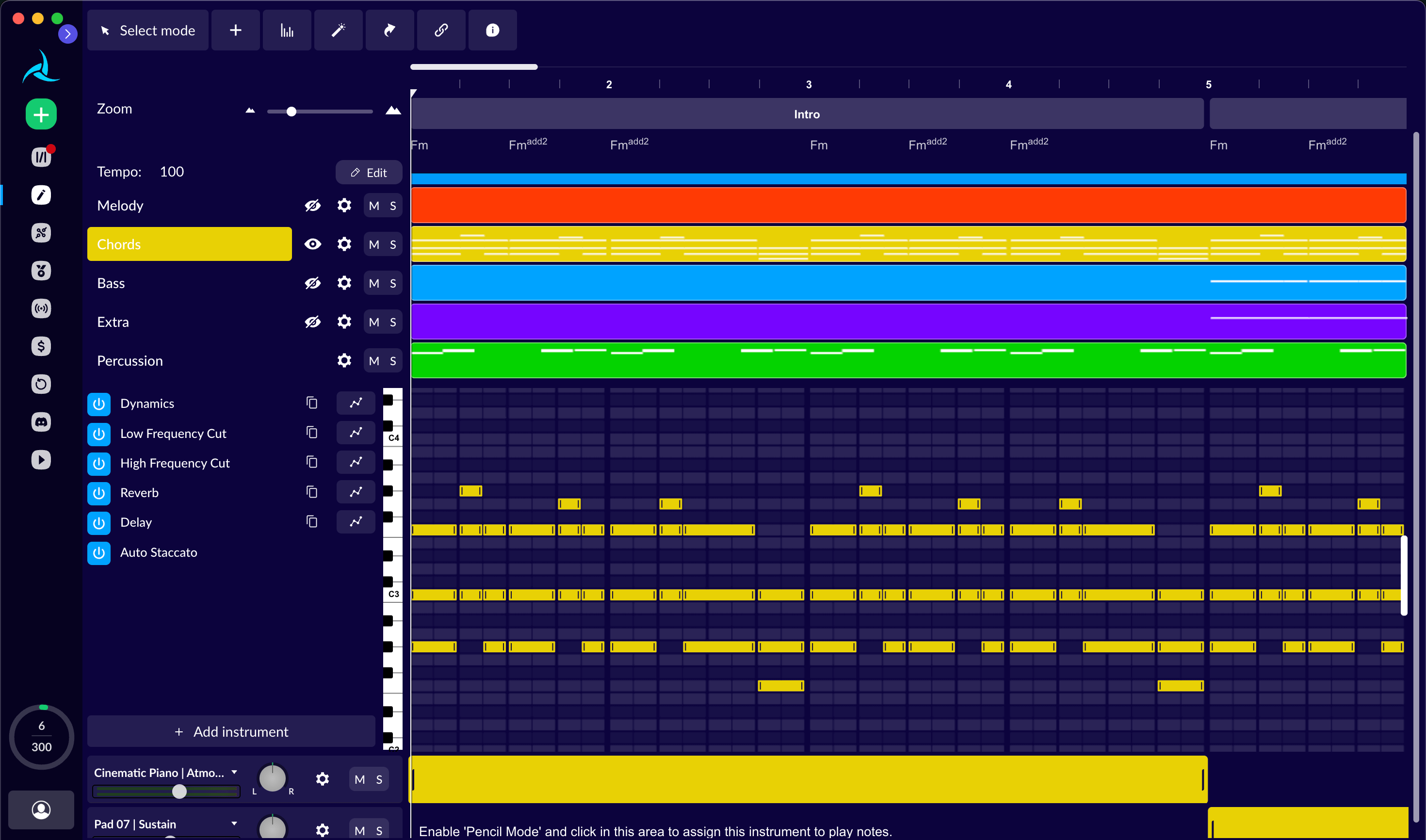

Branded as a ‘personal AI music generation assistant’, AIVA similarly allows you to generate songs in a matter of seconds across 250 different styles of music. Its interface allows for highly customisable models you can adapt to suit your workflow.

Unlike Udio, it doesn’t do text-to-audio; instead, it has more of a compositional feel: you can select your instrumentation and programme or import MIDI files. It has loads of presets to choose from, and you can build a track from scratch, a bit like in GarageBand, but with a clever AI assistant that helps you generate a more sophisticated piece.

For example, you can add chord and ornament layers, as well as set mixing parameters and overall mastering instructions. The company offers three tiers, ranging from the free version, which allows three downloads a month for non-commercial use only, to the £30/month Pro tier, which offers high-quality exports and gives you full copyright ownership of your tracks.

A number of tools can help your production by assisting with sample selection or by letting you extract the sounds you need from other sources to sample.

Some people I know started out with LALAL.AI, one of the earliest vocal removers I heard about. However, it charges by the minute of audio processed, so I prefer the free Vocalremover, which offers both a vocal remover and an excellent stem splitter.

Simply drag your track in, and within about a minute it’ll analyse it and separate out elements such as vocals, drums, bass, percussion, pads, piano and so on. You can either mix the level of each stem in the site’s interface or download individual stems (or a version with all but two stems muted), which is amazingly useful if you want to sample vocals for an edit or lift a percussion loop or break. The site also has a pitch and tempo adjuster, as well as an editor and track joiner.

A number of industry-leading plugin developers have started to introduce AI-powered sample libraries. I’m a fan of the developer Waves Audio, who have recently introduced the COSMOS Sample Finder, which analyses and categorises all your samples for you.

It takes into account factors such as the original instrument, timbre, style, key and BPM, but crucially also each sample’s sonic characteristics, renaming unhelpfully named files to something useful like ‘Bamboo Flute Single Shot Short Note’. This lets you search for samples using both concrete and more qualitative terms, such as ‘warm’ or ‘clean’.
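To give a rough idea of how a qualitative tag like ‘warm’ might be derived from audio, here is a minimal sketch. It assumes a sample has already been reduced to a magnitude spectrum (a list of frequency/magnitude pairs) and computes the spectral centroid, the magnitude-weighted mean frequency that is a standard brightness measure. This is not how COSMOS works internally; the function names and the 1,500 Hz cutoff are hypothetical.

```python
from typing import Sequence, Tuple


def spectral_centroid(spectrum: Sequence[Tuple[float, float]]) -> float:
    """Magnitude-weighted mean frequency (Hz) of (frequency, magnitude) pairs."""
    total = sum(mag for _, mag in spectrum)
    if total == 0:
        return 0.0
    return sum(freq * mag for freq, mag in spectrum) / total


def brightness_tag(spectrum: Sequence[Tuple[float, float]],
                   warm_cutoff_hz: float = 1500.0) -> str:
    """Hypothetical rule: a low centroid reads as 'warm', a high one as 'bright'."""
    return "warm" if spectral_centroid(spectrum) < warm_cutoff_hz else "bright"


# Toy spectra: energy concentrated low (a pad) vs. high (a hi-hat).
bass_pad = [(100.0, 1.0), (200.0, 0.8), (400.0, 0.3), (3000.0, 0.05)]
hi_hat = [(500.0, 0.1), (5000.0, 0.7), (9000.0, 1.0)]
print(brightness_tag(bass_pad))  # warm
print(brightness_tag(hi_hat))    # bright
```

A real tagger would combine many such features and learn the thresholds from labelled data rather than hard-coding one.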

Another cool tool to check out is Jamahook, ‘the world’s only AI Sound Matching’ tech, which allows a certain number of sample downloads per month depending on your subscription tier. It goes one step further than COSMOS: it analyses the track you’re working on in your DAW, then suggests samples that match the project based on its current timbres, mood and genre. If you like the sound of something, you can simply drag and drop the WAV file into whatever you’re working on.
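One common general approach to this kind of ‘sound matching’ is to describe both the project and each sample as a numeric feature vector, then rank samples by cosine similarity. The sketch below is not Jamahook’s actual method; it assumes hypothetical three-number feature vectors (brightness, energy and tempo, each scaled from 0 to 1) and made-up file names.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_samples(project: Sequence[float],
                 library: Dict[str, Sequence[float]],
                 top_n: int = 3) -> List[Tuple[str, float]]:
    """Return the top_n sample names whose features best match the project."""
    scored = [(name, cosine_similarity(project, feats))
              for name, feats in library.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_n]


# Hypothetical features: [brightness, energy, tempo], each scaled 0..1.
project = [0.2, 0.8, 0.6]
library = {
    "dusty_break.wav": [0.25, 0.75, 0.6],
    "airy_pad.wav": [0.9, 0.1, 0.2],
    "sub_bass.wav": [0.05, 0.9, 0.5],
}
for name, score in rank_samples(project, library, top_n=2):
    print(f"{name}: {score:.3f}")
```

Cosine similarity ignores overall magnitude, so a quieter sample with the same balance of features still ranks as a close match.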

Arguably, one of the most useful areas where AI tools have made an impact for producers is mixing and mastering. These are the final steps in finishing a track and are mostly regarded as ‘technical’ processes. An algorithmically driven approach to enhancing the sound of your piece, without touching the ‘creative’ process, can be really useful for getting a track polished enough to send out as a demo, road-test in clubs, or finalise as a pre-master.

One of the best plugin providers for mixing and mastering is iZotope, who have been using AI and machine learning for nearly a decade. Their programmes include:

Neutron: An AI mixing assistant, which analyses either single tracks or mix buses to apply EQ, compression, saturation, and stereo imaging processing. You can adjust its intensity up or down to achieve the desired results depending on how raw a sound you’re going for.

Ozone: Their AI mastering tool. It works similarly to Neutron, providing helpful analysis, and comes loaded with great presets to help you home in on the kind of master you’re looking for. It’ll even help match tone, width, and dynamics to a reference track.

RX 11: An industry-standard audio restoration tool. This is particularly useful if you’re bringing back to life a bunch of old, damaged, and imperfect samples.
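Reference matching of the kind described for Ozone can be illustrated with a much simpler idea. Compare the average level of the mix and the reference in a few frequency bands, turn each difference into an EQ gain, and scale it by an intensity control like the one Neutron exposes. This is a minimal sketch, not iZotope’s algorithm; the band names, dB figures and 6 dB clamp are assumptions.

```python
from typing import Dict


def match_eq(mix_db: Dict[str, float], reference_db: Dict[str, float],
             intensity: float = 1.0, max_gain_db: float = 6.0) -> Dict[str, float]:
    """Per-band EQ gains (dB) that move the mix towards the reference.

    intensity scales the correction (0 = none, 1 = full match);
    max_gain_db clamps each band so the correction stays gentle.
    """
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must be between 0 and 1")
    gains = {}
    for band, level in mix_db.items():
        diff = (reference_db[band] - level) * intensity
        gains[band] = max(-max_gain_db, min(max_gain_db, diff))
    return gains


# Hypothetical average band levels in dBFS.
mix = {"low": -18.0, "mid": -14.0, "high": -26.0}
reference = {"low": -16.0, "mid": -15.0, "high": -17.0}
print(match_eq(mix, reference, intensity=0.5))
# {'low': 1.0, 'mid': -0.5, 'high': 4.5}
```

At full intensity the ‘high’ band would ask for a 9 dB boost, so the clamp limits it to 6 dB; turning intensity down is a simple way to keep more of your own tonal character.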

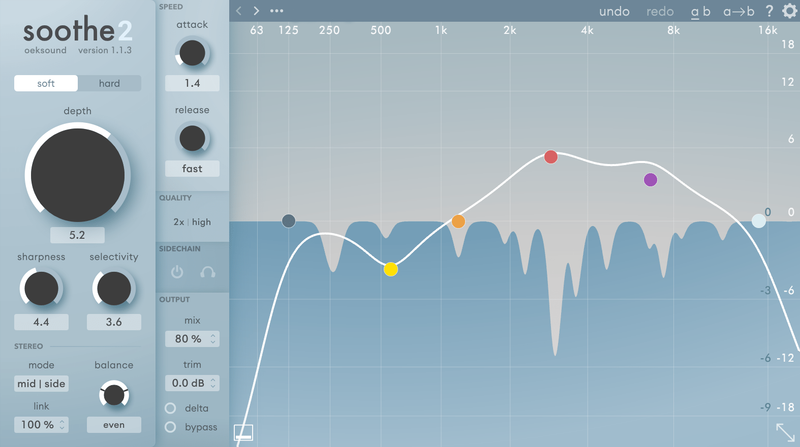

Boutique developer Oeksound’s Soothe 2 is a plugin many producers swear by. It uses advanced algorithmic processing to apply reactive EQ to your channel and is great at removing harsh or resonant frequencies while preserving the essential ones. Honestly, give it a try and get familiar with the parameters: it’ll lead to a much more pleasing mixdown. They also make a live version.
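Oeksound doesn’t spell out exactly how Soothe 2 works, but the general idea behind dynamic resonance suppression can be sketched. Smooth the spectrum to get a baseline, find bands that poke out above it by more than a threshold, and cut those bands by part of the excess. Run on every block of audio, the cuts would follow the material as it changes, which is what makes such an EQ ‘reactive’. This sketch handles a single frame of per-band levels in dB; the smoothing radius, threshold and depth are hypothetical parameters.

```python
from typing import List, Sequence


def smooth(levels_db: Sequence[float], radius: int = 2) -> List[float]:
    """Moving average over neighbouring bands: a rough spectral baseline."""
    out = []
    for i in range(len(levels_db)):
        lo, hi = max(0, i - radius), min(len(levels_db), i + radius + 1)
        window = levels_db[lo:hi]
        out.append(sum(window) / len(window))
    return out


def resonance_cuts(levels_db: Sequence[float], threshold_db: float = 3.0,
                   depth: float = 0.5, radius: int = 2) -> List[float]:
    """Gain (dB, <= 0) per band: cut peaks exceeding the baseline by threshold_db."""
    baseline = smooth(levels_db, radius)
    cuts = []
    for level, base in zip(levels_db, baseline):
        excess = level - base - threshold_db
        # Only bands sticking out above baseline + threshold are attenuated.
        cuts.append(-excess * depth if excess > 0 else 0.0)
    return cuts


# One frame of per-band levels (dB) with a harsh peak in band 3.
frame = [-20.0, -20.0, -20.0, -8.0, -20.0, -20.0, -20.0]
print([round(c, 2) for c in resonance_cuts(frame)])
```

Only the band that sticks out gets cut (here by roughly 3.3 dB), while the surrounding bands are left alone, which is why this style of processing can tame harshness without dulling the whole channel.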

If you want a quick master, it’s definitely worth trying an AI service.

The best I’ve used are Landr, SoundCloud (powered by Dolby), and Waves, but I’ve heard good things about Masterchannel too.

Single tracks typically cost $5-10. Some services offer a monthly subscription instead, while SoundCloud gives you three free tracks each month, after which a cost applies with SoundCloud Pro.

These services usually work by letting you choose a section of the track, then tweak the intensity of a preset. Remember, mastering is too subjective and complex for an algorithm to get totally right: an AI can only really match target parameters based on the audio data it’s given, rather than understand how the music is supposed to ‘feel’, which is where a professional engineer brings their expertise. So don’t use an AI master for a proper release!
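To make ‘matching target parameters’ concrete, here is a minimal sketch of the simplest such parameter: loudness. It measures the RMS level of a block of samples in dBFS, works out the gain needed to reach a target, and backs that gain off if it would push the peak above a ceiling. Real mastering services measure loudness in LUFS (ITU-R BS.1770) and use limiters rather than simply reducing gain; the -14 dBFS target and -1 dBFS ceiling are illustrative assumptions.

```python
import math
from typing import Sequence


def rms_dbfs(samples: Sequence[float]) -> float:
    """RMS level in dB relative to full scale (samples in -1.0..1.0)."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


def peak_dbfs(samples: Sequence[float]) -> float:
    """Highest absolute sample value, in dB relative to full scale."""
    peak = max(abs(s) for s in samples)
    return 20 * math.log10(peak) if peak > 0 else float("-inf")


def gain_to_target(samples: Sequence[float], target_dbfs: float = -14.0,
                   ceiling_dbfs: float = -1.0) -> float:
    """Gain (dB) towards the RMS target, limited so peaks stay under the ceiling."""
    wanted = target_dbfs - rms_dbfs(samples)
    headroom = ceiling_dbfs - peak_dbfs(samples)
    return min(wanted, headroom)


# A quiet 440 Hz sine at 0.1 amplitude: one second at 48 kHz.
tone = [0.1 * math.sin(2 * math.pi * 440 * n / 48000) for n in range(48000)]
print(round(rms_dbfs(tone), 1), round(gain_to_target(tone), 1))  # -23.0 9.0
```

Even this toy version shows the limitation the paragraph describes: the numbers say how loud the track is, not whether it should be that loud.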

One final way AI can supplement your creativity is through ‘collaboration’. Much like the aforementioned Actress project, AI tools essentially let you jam with a programme, giving you something to bounce ideas off.

If this sounds appealing, check out Magenta Studio, a Google-developed project that integrates with Ableton Live. Its tools let you input your own ideas, then use the predictive power of recurrent neural networks to vary them or generate further ideas based on what you came up with.
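Magenta Studio uses recurrent neural networks, which are beyond a short example, but the underlying idea of learning what tends to follow what in your phrase and then generating a variation can be illustrated with a much simpler, swapped-in technique: a first-order Markov chain over MIDI note numbers. This is not Magenta’s method; the function names and the seed phrase are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List


def build_transitions(melody: List[int]) -> Dict[int, List[int]]:
    """Map each note to the list of notes that followed it in the input."""
    transitions: Dict[int, List[int]] = defaultdict(list)
    for current, nxt in zip(melody, melody[1:]):
        transitions[current].append(nxt)
    return transitions


def generate_variation(melody: List[int], length: int, seed: int = 0) -> List[int]:
    """Random walk through the transitions, starting from the melody's first note."""
    rng = random.Random(seed)  # seeded so the same idea can be recalled later
    transitions = build_transitions(melody)
    note = melody[0]
    out = [note]
    while len(out) < length:
        # If a note never had a successor, fall back to any note from the input.
        choices = transitions.get(note) or melody
        note = rng.choice(choices)
        out.append(note)
    return out


# Seed phrase as MIDI note numbers (60 = middle C).
phrase = [60, 62, 64, 62, 60, 67, 64, 62, 60]
print(generate_variation(phrase, length=16, seed=42))
```

Because successors are sampled in proportion to how often they occurred, the variation keeps the flavour of your phrase while recombining it; changing the seed gives a different take.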

As with any new innovation, there is a degree of the unknown that makes using these tools exciting. Given that we are only at the beginning of discovering how powerful AI will become, it is important to bear in mind the challenges and ethical considerations that come with it in music.

Any tool is trained on a specific dataset, and any bias in that data or in the algorithm will skew its output. By and large, everything is trained on material that tends towards commercially popular music, be that a generative programme that creates original melodies or a mastering preset in Ozone. So you are likely to get generic-sounding results, particularly when you apply AI tools to your process without much input of your own.

This ties into concerns about a loss of creativity and the thorny issue of copyright. It’s important not to become over-reliant on these tools, so only incorporate them in ways that enhance your creativity rather than replace it.

In the long term, the more you sit back and let an algorithm tell you what to do, the more you will simply lose your enjoyment and skill. There is also a grey area at the moment: if you use Magenta to generate 36 bars based on what you’ve written, you still cannot claim ownership of the music it produces, but using an AI mixing tool doesn’t affect the originality of the IP. Don’t be scared to use new tools, but always be transparent about where you’ve used AI.

Finally, as with any discipline, keep yourself informed so you are continuously learning and adapting. Great new inventions are cropping up all the time, ones that could help you solve particular production problems you come up against, improve your mixdowns, provide inspiration, and give your compositions and arrangements an added edge.